Language Modeling using RNNs

CMU 11-785 Course: Deep Learning

Language Modeling —

A language model is a probability distribution over word sequences in a language. A word/token sequence is a complete string of tokens with a definite beginning and end.

Language modeling involves predicting the probability distribution of words or tokens in a sequence given a context or history of previous words. One way to approach language modeling is to use start of sequence (<sos>) and end of sequence (<eos>) markers to distinguish different token sequences.

A complete sentence would be:

<sos> I love mangoes. <eos>

Both tags must be included in the neural network’s vocabulary. For example, a character-level language model would have a vocabulary consisting of letters (a-z, A-Z), punctuation marks (‘,’, ‘.’, etc.), the space character (‘ ‘), and the <sos> and <eos> tags.
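As a rough illustration, a character-level vocabulary that includes the two markers could be built as follows. The helper names `build_vocab` and `encode` are hypothetical and introduced only for this sketch; they are not part of the assignment code.

```python
import string

SOS, EOS = "<sos>", "<eos>"

def build_vocab():
    """Map every symbol the model can emit to an integer id (hypothetical helper)."""
    symbols = [SOS, EOS] + list(string.ascii_letters) + list(string.punctuation) + [" "]
    return {symbol: index for index, symbol in enumerate(symbols)}

def encode(text, vocab):
    """Wrap a string in <sos>/<eos> and convert it to a list of ids."""
    return [vocab[SOS]] + [vocab[ch] for ch in text] + [vocab[EOS]]

vocab = build_vocab()
ids = encode("I love mangoes.", vocab)
print(len(vocab), ids[0], ids[-1])
```

The markers are stored as single vocabulary entries, so they never collide with the individual characters `<` and `>`.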

Computing the Probability of a Complete Token Sequence —

In order to do this, the language model needs to assign a probability to each token in the sequence, conditioned on the history of previous tokens. This is done by modeling the conditional probability distribution of each token given the previous tokens in the sequence, using techniques such as recurrent neural networks (RNNs) or transformers. The probability of the complete sequence is then obtained by multiplying together the conditional probabilities of each token in the sequence.
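In symbols, for a sequence $t_{1}, \dots, t_{N}$ with $t_{0}$ denoting <sos> and $t_{N+1}$ denoting <eos>, this product is the chain rule of probability:

$$P(t_{1}, \dots, t_{N}) = \prod_{n=0}^{N} P(t_{n+1} \mid t_{0}, \dots, t_{n})$$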

The language model can be trained to optimize this probability by minimizing the loss between the predicted probabilities and the true target distribution of the next token in the sequence. The resulting language model can be used for a wide range of tasks, such as machine translation, text generation, and sentiment analysis.

Token Embedding —

One way to represent every token in the vocabulary, including <sos> and <eos>, is as a one-hot vector. However, one-hot vectors are wasteful: their dimensionality equals the vocabulary size, and each contains only a single nonzero entry. Instead, we project these vectors down to a lower-dimensional space with a learned linear transformation; the result is the token's embedding.
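Because a one-hot vector selects exactly one row of the projection matrix, the projection can be implemented as a table lookup. The minimal sketch below checks this on a toy matrix; `E`, `one_hot`, and `project` are illustrative names, and the values are arbitrary.

```python
def one_hot(index, size):
    """Return a one-hot list of the given size with a 1.0 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def project(vector, matrix):
    """Compute vector @ matrix for a (V,) vector and a (V, D) matrix."""
    dim = len(matrix[0])
    return [sum(v * row[d] for v, row in zip(vector, matrix)) for d in range(dim)]

# Toy embedding matrix: 4 tokens in the vocabulary, embedding dimension 2.
E = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
token_id = 2

via_matmul = project(one_hot(token_id, len(E)), E)  # one-hot times matrix
via_lookup = E[token_id]                            # direct row lookup
print(via_matmul == via_lookup)
```

This is why embedding layers in deep learning libraries take integer token ids as input rather than explicit one-hot vectors.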

So, to compute the probability of any complete token sequence ($t_{1}, t_{2}, \dots, t_{N}$) using an RNN:

Add <sos> before the sequence and <eos> after the sequence: $$\text{<sos>}, t_{1}, t_{2}, \dots, t_{N}, \text{<eos>}$$

Pass everything except the final <eos> through the network as input, computing a probability distribution over the vocabulary at each step: $$\text{<sos>}, t_{1}, t_{2}, \dots, t_{N}$$

At each time step $n = 0, 1, \dots, N$, where $t_{0}=\text{<sos>}$ and $t_{N+1}=\text{<eos>}$, compute the embedding of the $n^{th}$ token, pass it to the network to compute the probability distribution for the next token, and read out the probability assigned to the actual next token $t_{n+1}$.

Multiply these probabilities together to obtain the probability of the complete sequence.
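The steps above can be sketched in a few lines. To keep the example self-contained, the RNN is replaced by `TOY_MODEL`, a hypothetical lookup table whose next-token distribution depends only on the previous token; a real RNN would condition on the whole history. The sketch sums log-probabilities instead of multiplying raw probabilities, which is the usual way to avoid numerical underflow on long sequences.

```python
import math

SOS, EOS = "<sos>", "<eos>"

# Hypothetical stand-in for the trained network (values are made up).
TOY_MODEL = {
    SOS: {"a": 0.5, "b": 0.5},
    "a": {"a": 0.25, "b": 0.5, EOS: 0.25},
    "b": {"a": 0.5, EOS: 0.5},
}

def next_token_probs(history):
    """Return P(next token | history); this toy version only looks at the last token."""
    return TOY_MODEL[history[-1]]

def sequence_log_prob(tokens):
    """Sum log P(t_{n+1} | t_0..t_n) over n = 0..N."""
    padded = [SOS] + list(tokens) + [EOS]
    log_prob = 0.0
    for n in range(len(padded) - 1):
        probs = next_token_probs(padded[: n + 1])
        log_prob += math.log(probs[padded[n + 1]])
    return log_prob

prob = math.exp(sequence_log_prob(["a", "b"]))
print(prob)
```

For the sequence `a b`, the three factors are P(a | <sos>), P(b | a), and P(<eos> | b), each 0.5 in this toy table.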

Train a Language Model —

We would like to train a model that computes $P(t_{n} \mid \text{<sos>}, t_{1}, \dots, t_{n-1}; \theta)$.

Since the language model is a parametric model for a probability distribution, we can use maximum likelihood estimation (MLE) for the training. The goal of MLE is to maximize the likelihood of the observed data given the parameters of the model. In the case of language modeling, the observed data consists of a set of training sequences, and the parameters $\theta$ are the network weights that determine the probability of each token given the previous tokens in the sequence.

The likelihood function is the probability of the observed training sequences given the model parameters. After this is defined, take the logarithm of the likelihood function, which simplifies the computation and converts the product of probabilities into a sum of logarithms. Finally, minimize the negative log-likelihood using an optimization algorithm such as stochastic gradient descent (SGD), which updates the model parameters iteratively.
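As a sketch of this objective in symbols, assume a training set $\mathcal{D}$ in which sequence $s$ consists of tokens $t^{(s)}_{1}, \dots, t^{(s)}_{N_s}$, with $t^{(s)}_{0}$ denoting <sos> and $t^{(s)}_{N_s+1}$ denoting <eos>. The symbols $\mathcal{D}$ and $N_s$ are introduced here only for illustration. The negative log-likelihood to minimize is then:

$$\mathcal{L}(\theta) = -\sum_{s \in \mathcal{D}} \sum_{n=0}^{N_s} \log P\left(t^{(s)}_{n+1} \mid t^{(s)}_{0}, \dots, t^{(s)}_{n}; \theta\right)$$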

During training, the language model learns to assign high probabilities to the tokens that are most likely to occur given the previous tokens in the sequence, and low probabilities to the less likely tokens. The resulting model can be used to predict the probability distribution over tokens for a given input sequence, and generate new text by sampling from this distribution.

In this assignment, I trained an RNN language model on the WikiText-2 Language Modeling Dataset. The tasks were to predict the next token and generate sequences. The metric for evaluation was the negative log-likelihood.

Regularization Techniques for the RNN LSTM-based Model —

The following techniques can improve the model’s performance:

Locked dropout / variational dropout

This technique applies dropout to the recurrent connections of the RNN, but the same dropout mask is used at each time step during training. This helps to prevent the RNN from overfitting to the sequence at a particular time step, and encourages it to learn more robust and generalizable representations.
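The key idea, one mask shared across all time steps, can be sketched without any deep learning library. In this illustration the mask is applied to a plain list of activation vectors; where exactly the mask is applied inside the network varies by implementation, and `locked_dropout` is a hypothetical helper name.

```python
import random

def locked_dropout(sequence, drop_prob, rng):
    """Apply one shared dropout mask to every time step of a (T, H) sequence."""
    hidden_size = len(sequence[0])
    keep_prob = 1.0 - drop_prob
    # Sample the mask once per sequence, not once per time step.
    # Kept units are scaled by 1 / keep_prob (inverted dropout).
    mask = [1.0 / keep_prob if rng.random() < keep_prob else 0.0
            for _ in range(hidden_size)]
    return [[value * m for value, m in zip(step, mask)] for step in sequence]

rng = random.Random(0)
sequence = [[1.0] * 8 for _ in range(5)]  # 5 time steps, 8 features each
dropped = locked_dropout(sequence, drop_prob=0.5, rng=rng)
print(dropped[0])
```

Since the input here is constant over time, every row of `dropped` is identical, which makes the shared mask easy to see.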

Embedding dropout

This technique applies dropout to the input embeddings of the language model, which helps to prevent the model from relying too heavily on individual words in the input sequence.
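One common way to realize this, assumed here rather than taken from the assignment, is to drop entire rows of the embedding matrix so that a dropped token is zeroed everywhere it occurs in the batch. A minimal sketch, with `embedding_dropout` as a hypothetical helper:

```python
import random

def embedding_dropout(embedding, drop_prob, rng):
    """Zero out whole rows (tokens) of a (V, D) embedding matrix and rescale the rest."""
    keep_prob = 1.0 - drop_prob
    dropped = []
    for row in embedding:
        # One draw per token: either the whole vector survives (rescaled) or it is zeroed.
        scale = 1.0 / keep_prob if rng.random() < keep_prob else 0.0
        dropped.append([value * scale for value in row])
    return dropped

rng = random.Random(0)
embedding = [[float(i + 1)] * 3 for i in range(6)]  # 6 tokens, dimension 3
masked = embedding_dropout(embedding, drop_prob=0.5, rng=rng)
print(masked)
```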

Weight decay

This technique adds a penalty term to the loss function during training, which encourages the model to have smaller weights. This helps to prevent the model from becoming too complex and overfitting to the training data.
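As a small worked example, assume the penalty is the L2 term $\frac{\lambda}{2}\lVert w \rVert^2$, whose gradient is $\lambda w$. The function names and the numbers below are illustrative only.

```python
def l2_penalty(weights, weight_decay):
    """Return 0.5 * weight_decay * (sum of squared weights)."""
    return 0.5 * weight_decay * sum(w * w for w in weights)

def sgd_step(weights, grads, lr, weight_decay):
    """One SGD update on (data loss + L2 penalty).

    The penalty contributes weight_decay * w to each gradient component.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

weights = [1.0, -2.0]
grads = [0.0, 0.0]  # zero data gradient isolates the effect of the penalty
updated = sgd_step(weights, grads, lr=0.1, weight_decay=0.5)
print(updated)
```

With a zero data gradient, each weight simply shrinks toward zero by the factor `1 - lr * weight_decay`.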

Weight tying

This technique shares the weights of the input and output embeddings of the language model, which reduces the number of parameters in the model and helps to prevent overfitting.
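A minimal sketch of the idea: a single matrix `E` serves both as the input embedding table and as the output projection. The names are hypothetical, and the "RNN" is skipped entirely so that the sharing is easy to follow. Note that tying requires the hidden size fed to the output layer to equal the embedding dimension.

```python
def embed(token_id, E):
    """Input embedding: look up row `token_id` of the (V, D) matrix E."""
    return E[token_id]

def output_logits(hidden, E):
    """Output layer reusing E: the logit for token v is the dot product of hidden with row v."""
    return [sum(h * e for h, e in zip(hidden, row)) for row in E]

E = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # shared matrix: 3 tokens, dimension 2
hidden = embed(2, E)                       # pretend the network passes the embedding through
logits = output_logits(hidden, E)
print(logits)
```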

Data augmentation

This technique involves generating new training examples by applying various transformations to the existing data, such as adding noise or perturbing the input sequence. This helps to increase the size and diversity of the training data, which can improve the generalization performance of the model.

Temporal activation regularization

This technique involves adding a penalty term to the loss function during training that encourages the activations of the hidden units in the RNN to be smooth and continuous over time. This helps to prevent the RNN from overfitting to sudden changes or fluctuations in the input sequence, and encourages it to learn more stable and consistent representations.
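One way to write such a penalty, assumed here for illustration, is a coefficient `beta` times the mean squared difference between consecutive hidden states. The helper name and the example sequences are made up.

```python
def temporal_activation_penalty(hidden_states, beta):
    """beta * mean squared difference between consecutive hidden states of a (T, H) sequence."""
    squared_diffs = [
        (later - earlier) ** 2
        for prev, nxt in zip(hidden_states, hidden_states[1:])
        for earlier, later in zip(prev, nxt)
    ]
    return beta * sum(squared_diffs) / len(squared_diffs)

smooth = [[0.0, 0.0], [0.1, 0.1], [0.2, 0.2]]   # hidden state drifts slowly
jumpy = [[0.0, 0.0], [1.0, -1.0], [0.0, 0.0]]   # hidden state changes abruptly

print(temporal_activation_penalty(smooth, beta=2.0))
print(temporal_activation_penalty(jumpy, beta=2.0))
```

The abruptly changing sequence receives the larger penalty, so adding this term to the loss pushes the network toward hidden states that evolve gradually.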

Example: RNN for Machine Translation

This section walks through a demonstration of a GRU-based RNN applied to a machine translation task.

Reference: Language Translation with RNNs

Task—

Build a deep neural network that functions as part of a machine translation pipeline. The pipeline accepts English text as input and returns the French translation. Compare accuracy across various model architectures.

Steps —

Preprocessing: load and examine data, cleaning, tokenization, padding

Modeling: build, train, and test the model

Training settings: loss=sparse_categorical_crossentropy, optimizer=Adam(learning_rate), metrics=['accuracy'], batch_size=1024, epochs=10, validation_split=0.2

Prediction: generate specific translations of English to French, and compare the output translations to the ground truth translations

Iteration: iterate on the model, experimenting with two different architectures: a GRU-based RNN, and a GRU-based RNN with an embedding layer

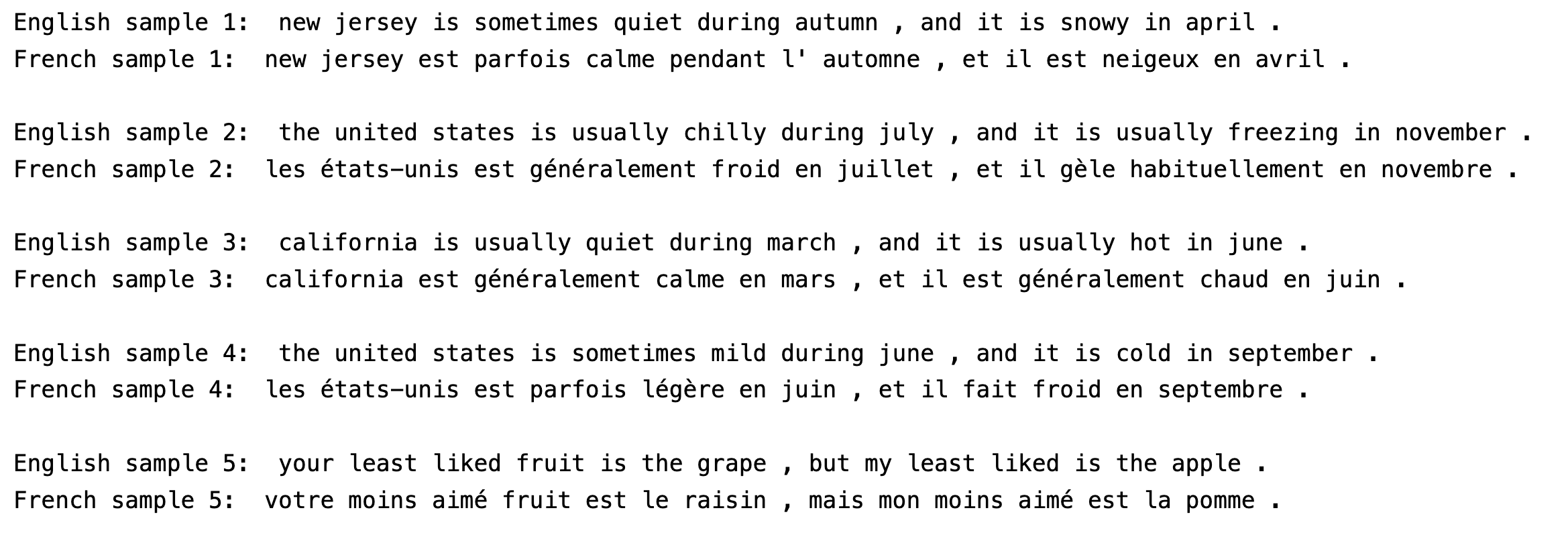

The dataset

Tokenizing the text sentence input

Make sure all the English sequences have the same length and all the French sequences have the same length by adding padding to the end of each sequence
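The tokenization and padding steps can be sketched in plain Python. This is only an illustration of what a library tokenizer and padding utility would do, not the code from the referenced project; `tokenize` and `pad_sequences_post` are hypothetical helpers, and the example sentences are made up.

```python
def tokenize(sentences):
    """Assign word ids starting at 1 (0 is reserved for padding) and encode each sentence."""
    word_to_id = {}
    encoded = []
    for sentence in sentences:
        ids = []
        for word in sentence.lower().split():
            # setdefault returns the existing id, or stores and returns the next free id
            ids.append(word_to_id.setdefault(word, len(word_to_id) + 1))
        encoded.append(ids)
    return encoded, word_to_id

def pad_sequences_post(sequences, pad_id=0):
    """Right-pad every sequence with pad_id to the length of the longest one."""
    max_len = max(len(seq) for seq in sequences)
    return [list(seq) + [pad_id] * (max_len - len(seq)) for seq in sequences]

encoded, word_to_id = tokenize(["I love mangoes", "I love ripe mangoes too"])
padded = pad_sequences_post(encoded)
print(padded)
```

Because id 0 is reserved for padding, a layer that indexes by token id needs room for one more entry than the number of distinct words.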

Simple RNN model with GRU

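```python
from tensorflow.keras.layers import GRU, Dense, Dropout, TimeDistributed
from tensorflow.keras.losses import sparse_categorical_crossentropy
from tensorflow.keras.models import Sequential
from tensorflow.keras.optimizers import Adam


def simple_model(input_shape, output_sequence_length, english_vocab_size, french_vocab_size):
    """
    Build a basic GRU-based RNN that maps x to y
    :param input_shape: Tuple of input shape
    :param output_sequence_length: Length of output sequence
    :param english_vocab_size: Number of unique English words in the dataset
    :param french_vocab_size: Number of unique French words in the dataset
    :return: Keras model built, but not trained
    """
    # Hyperparameters
    learning_rate = 0.005

    model = Sequential()
    # return_sequences=True emits one 256-dimensional hidden state per time step
    model.add(GRU(256, input_shape=input_shape[1:], return_sequences=True))
    model.add(TimeDistributed(Dense(1024, activation='relu')))
    model.add(Dropout(0.5))
    # Per-step softmax over French tokens; the +1 leaves room for padding index 0
    # (assuming word ids start at 1)
    model.add(TimeDistributed(Dense(french_vocab_size+1, activation='softmax')))

    # Compile model
    model.compile(loss=sparse_categorical_crossentropy,
                  optimizer=Adam(learning_rate),
                  metrics=['accuracy'])
    return model
```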

RNN Model with Embeddings

```python
from tensorflow.keras.layers import GRU, Dense, Dropout, Embedding, TimeDistributed
from tensorflow.keras.losses import sparse_categorical_crossentropy
from tensorflow.keras.models import Sequential
from tensorflow.keras.optimizers import Adam


def embed_model(input_shape, output_sequence_length, english_vocab_size, french_vocab_size):
    """
    Build an RNN model that uses a word embedding layer to map x to y
    :param input_shape: Tuple of input shape
    :param output_sequence_length: Length of output sequence
    :param english_vocab_size: Number of unique English words in the dataset
    :param french_vocab_size: Number of unique French words in the dataset
    :return: Keras model built, but not trained
    """
    # Hyperparameters
    learning_rate = 0.005

    model = Sequential()
    # Map each English token id to a dense 256-dimensional vector
    model.add(Embedding(english_vocab_size, 256, input_shape=input_shape[1:]))
    model.add(GRU(256, return_sequences=True))
    model.add(TimeDistributed(Dense(1024, activation='relu')))
    model.add(Dropout(0.5))
    # Unlike simple_model there is no +1 here, so both vocabulary sizes
    # passed in must already count the padding index 0
    model.add(TimeDistributed(Dense(french_vocab_size, activation='softmax')))

    # Compile model
    model.compile(loss=sparse_categorical_crossentropy,
                  optimizer=Adam(learning_rate),
                  metrics=['accuracy'])
    return model
```

Insights

After 10 epochs, here are the metrics:

RNN: loss: 0.7891 - accuracy: 0.7287 - val_loss: 0.6902 - val_accuracy: 0.7620

RNN with Embedding: loss: 0.1796 - accuracy: 0.9355 - val_loss: 0.1819 - val_accuracy: 0.9367

Adding an embedding layer substantially improves the performance of the RNN-based translation model. The first model, a GRU without an embedding layer, reaches a training accuracy of 0.7287 and a validation accuracy of 0.7620 after 10 epochs. The second model, which adds an embedding layer in front of the GRU, reaches a training accuracy of 0.9355 and a validation accuracy of 0.9367. This suggests that the embedding layer lets the model learn more informative representations of the input tokens, which improves its ability to predict the correct translations.